How marketers can navigate the legal pitfalls of generative AI

WASHINGTON — Generative AI remains at the center of conversations in technology and advertising, as tech giants, agency holding companies and marketing consultancies continue to roll out new advancements. Although the technology has created some uncertainty about the future of jobs, marketers also see it as a balm for burnout and a way to boost investment in creator content.

Amid all of these applications and experiments, generative AI still faces a raft of legal issues and practical pitfalls that marketers must navigate while integrating the tech into their operations. Those concerns were the topic of a panel at the IAB Public Policy and Legal Summit in April.

Panelists also clarified some definitional distinctions that marketers must understand, especially as agencies, ad-tech providers and other platforms rush to adopt generative AI while rebranding and highlighting AI functionality that has been part of the ad industry for more than a decade.

“You've probably been using machine learning and deep learning to segment your audience, to develop ad budgets, to place ads, to understand what type of viewers may be more responsive to particular types of advertisements,” said Dera Nevin, managing director at FTI Consulting. “Machine learning and deep learning has been used in the advertising industry for a long time … and now we're starting to see the use of generative AI to generate content.”

Cooking with AI

To explain AI, Nevin offered a cooking metaphor: algorithms are recipes, data inputs are ingredients and generated outputs are the prepared food. In that framing, machine learning is a simple recipe, while the deep learning that drives large language models and generative AI is a much more complicated one. As in the kitchen, the final product is only as good as its ingredients, and the data an AI model is trained on limits how effective and accurate its output can be.

“In order to really understand what kind of food you're going to get when the recipes interact with the ingredients, you actually need to know what's in the kitchen and who's preparing it,” Nevin said. “But there's often little transparency behind what the [recipe] is or what the ingredients are. Without knowing that, you just don't know what kind of food … is going to come out.”

Agencies and brands should be concerned about both the data they enter as generative AI prompts and the output that comes back. When marketers use public-facing generative AI tools like ChatGPT, the data they enter can become part of the tool's training data set, whether that data is confidential, personal or otherwise private.

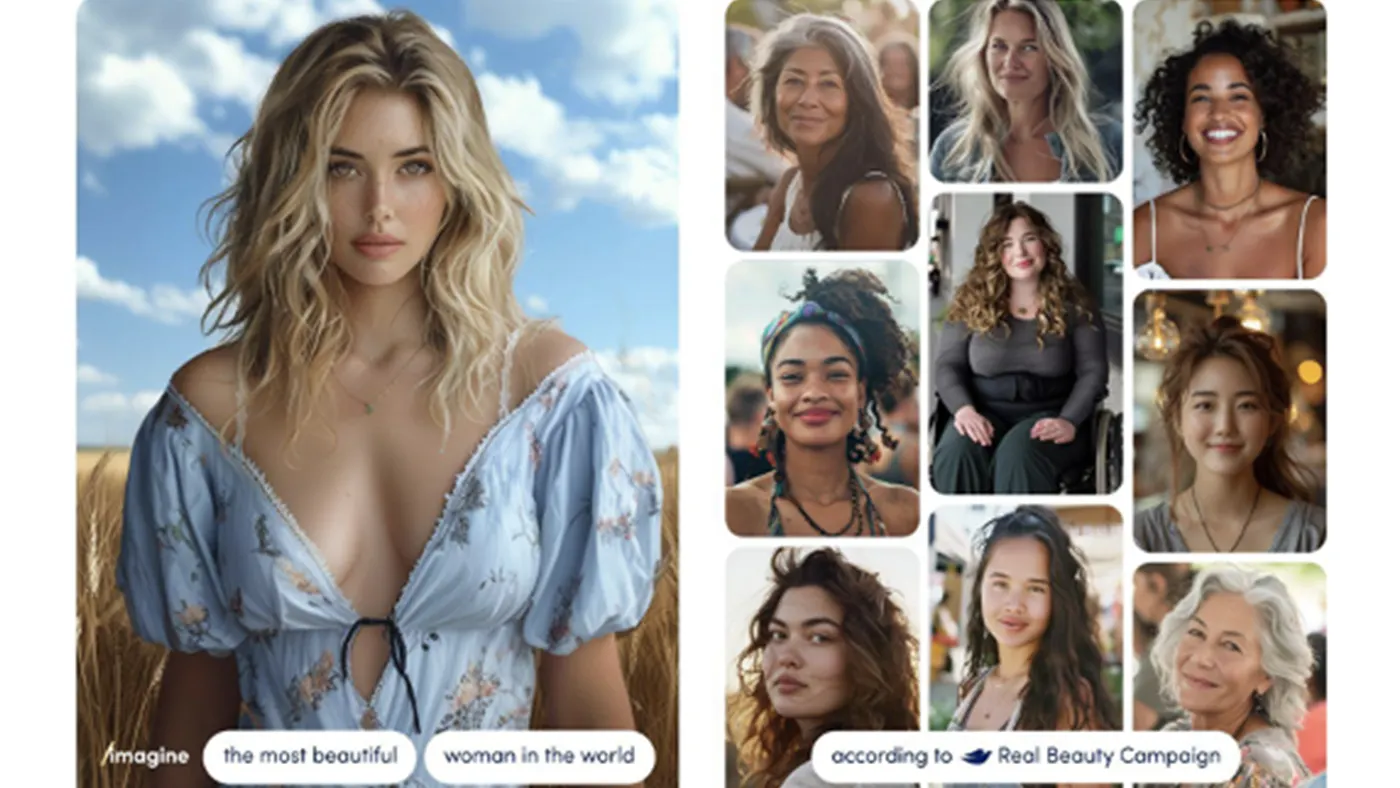

On the output side, marketers should be wary of content generated without an understanding of context, which can produce results that embarrass brands. AI can also simulate human behavior, clicking or otherwise “behaving” as people do, which can skew metrics and distort marketers' understanding of engagement.

“Hallucination” has become a popular way to describe unexpected output generated by AI, but Nevin pushed back on the term because it attributes human characteristics to technology. So-called hallucinations result from the underlying math and probabilities: the technology is doing what it was designed to do, but it lacks the human ability to create original ideas.

“A human being combined two concepts to come up with ‘Sharknado.’ I don't know that an AI could do that,” she said. “But an AI could come up with very credible ‘Sharknado 2,’ ‘3,’ ‘4’ and ‘5.’”

Generating opportunities

Much of the past year has focused on generative AI's threat vectors, IAB CEO David Cohen explained in an interview at the summit. Those include concerns about publisher and ad traffic, compensation for copyrighted material included in large language models, and signaling protocols that determine what should and should not be allowed to train AI models. As the industry begins to solve for those threats, brands and agencies can turn to the opportunity vectors.

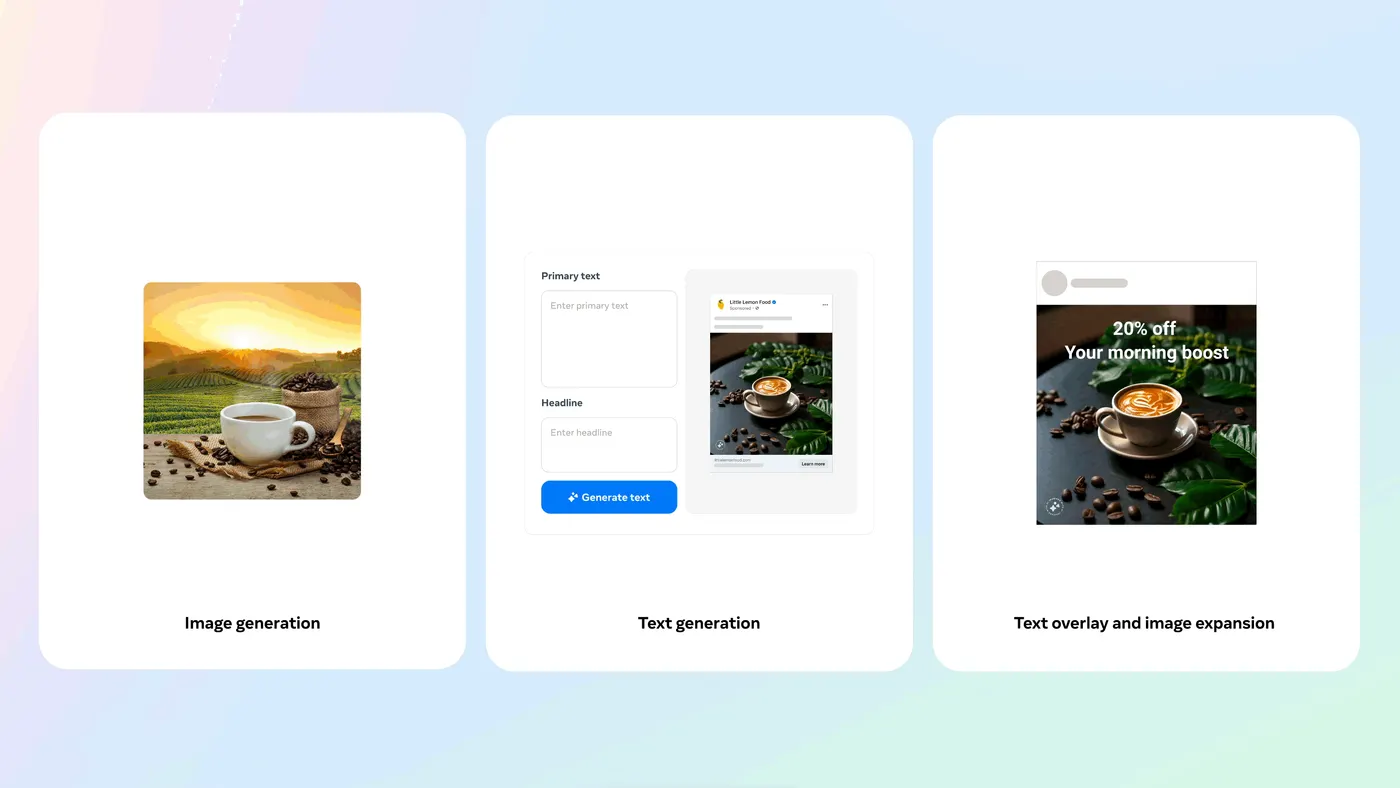

“How do we use all of this for creative efficiency, workflow efficiency, making our business more agile, adaptable and efficient? There's tons and tons of work that's going on there on the creative side,” Cohen said. “The opportunity [piece] is what the next 12 to 24 months will look like.”

Marketers may be better served pursuing some of those opportunities through tech company offerings that protect brand safety more effectively than public-facing tools do. For example, Adobe's Firefly platform can be trained on a brand's own assets rather than on publicly scraped data, which gives its outputs better resonance with the brand, explained Matt Savare, a partner at Lowenstein Sandler, LLP, during the panel. Companies including Adobe, Google and Shutterstock have also announced plans to indemnify users against third-party intellectual property claims, which helps protect smaller brands and agencies from legal peril.

Still, those opportunities might have to wait as brands and agencies triage more pressing concerns, like the deprecation of third-party cookies, first-party data strategies and Google's Privacy Sandbox proposals, Cohen explained.

Whether generative AI will eventually deliver on the loftiest promises of its biggest boosters and create high-level, creative campaigns remains to be seen. Other technologies have already transformed many parts of the advertising ecosystem in once-unimaginable ways, and generative AI could one day become the next miracle tool.

“The generation that's coming up is going to be doing what we do in extremely novel ways, and I wouldn't be surprised if we get to precision advertising [with AI] at some point,” Nevin said. “I just don't know how quickly that's going to come.”